Trust, AI and The Human

INSIGHT - HELEN BLAKE

Customer trust is the most valuable asset a company can have. It has a clear financial impact; it is fluid, dynamic and always contextual.

We are sifting through misinformation (suspected of being AI-generated), ‘mis-speaking’, fake news, and frauds and scams attempted on a daily basis, while navigating polarised opinions on pretty much everything that touches our lives. Now more than ever, we need brands, businesses and employers we can trust: organisations that are transparent, empathetic, easy to deal with and actually do what they promise.

What is trust anyway?

Trust is the basis on which we build relationships; it is what makes us human. In a business context, our customers trust our companies and brands to deliver on our promise to them. For some of us in business, trust may seem abstract, yet we know it has measurable implications. We all spend time and money with the brands we love. We recommend them to others, and we may pay more for their excellent service. The great thing is that trusted companies can recover speedily from unfortunate incidents.

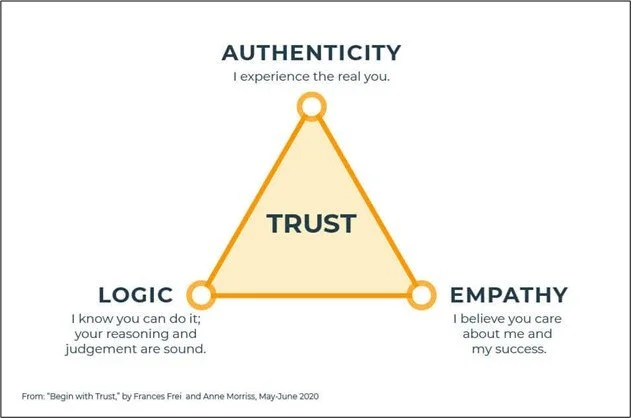

According to Frances X. Frei and Anne Morriss in Harvard Business Review, trust results from a combination of the three qualities shown in the Trust Triangle: Authenticity, Logic and Empathy. When trust is lost, it is because at least one of these three areas has broken down.

As business has increased AI adoption, customer trust in AI has decreased

This is the dichotomy highlighted in the recent Salesforce survey ‘State of the Connected Customer.’

We know that the potential of AI is vast and that great business results have been, and can be, achieved. AI enables innovation, efficiency, automation of routine tasks, personalisation of customer experience and faster analysis of vast amounts of data with predictive applications.

Nevertheless, customer concerns are growing around other aspects of AI, particularly privacy and transparency. Some B2B and B2C customers believe companies do not respect their personal data. Although 42% of customers trust businesses to use AI ethically, this is significantly lower in 2025 than it was in 2023: down 16 percentage points from 58%.

Customers want to know when they are dealing with AI (72% call for more visibility). Although business buyers are more open to collaborating with AI for efficiency, they generally still prefer human expertise and intervention on complex, high-risk matters.

Being mindful of the Trust Triangle above can help us to challenge our AI strategies and ensure appropriate levels of personalisation and transparency. Asking for too much data, particularly sensitive business information, can soon drive buyer mistrust and feelings that the business is transactional, impersonal and inauthentic; in other words, that it is too self-orientated to build customer rapport and good working relationships.

Understanding how our customers trust us

Doing business is about delivering value to customers beyond the direct product or service provided, including how we make our customers feel during their dealings with us at every touchpoint. At Futurecurve, we talk about the humans in the system and the rational, emotional and socio-political aspects of customer value.

When we work with Futurecurve clients on ways of reinforcing trust with their customers, the Trust Equation by Charles H. Green is always worth exploring:

Source: The Trusted Advisor Associates & Charles H. Green

The equation shows that trust is diminished if a person or a company is seen to act in their own self-interest. The more we experience this self-orientation, the less we trust them. If a customer experiences the company’s AI as the ‘self-orientation’ of the business, offering little or no value to the customer, then trust will be diminished and lost.
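Written out, the Trust Equation as it is commonly published by Trusted Advisor Associates takes the form below. The single-letter symbols are shorthand assumed here for readability: T for trustworthiness, C for credibility, R for reliability, I for intimacy and S for self-orientation.

$$
T = \frac{C + R + I}{S}
$$

Because self-orientation sits in the denominator, any rise in perceived self-interest lowers trust even when credibility, reliability and intimacy are unchanged.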

As a starting point, our research highlights the following approaches, which frequently help build customer trust when AI is involved:

Put yourself in your customer’s shoes and experience your AI strategies as a customer. How do they make you feel? Confident and reassured, or frustrated and irritated?

Positively manage your customers’ AI experiences. It shouldn’t be a secret or a mysterious occurrence: let customers know what content is AI-generated and how they can request a switch from an AI agent to a human.

Customers are people, not data points, and AI strategies need to be fair and unbiased. If decisions are being made using AI processes, these need to be clearly explained to the customer, who should have the opportunity to challenge a decision if they believe an error has occurred. Regular audits for AI bias have uncovered unexpected, and sometimes unwanted, results for a fair number of businesses.

Be clear about your data privacy and ethics policies. Ensure you are not collecting unnecessary information about customers. Let customers know why their data is required and give them ultimate control over it.

Customers are generally happy to use AI agents but need to know they can involve a human when needed. This level of human oversight is reassuring.

Be straightforward and easy to do business with. Communicate in a clear, open and honest way, and demonstrate that you understand customer expectations and keep your promises. These are business mantras that can be sustained by both AI and the humans in the business.

AI’s potential is vast, particularly when it enhances, rather than replaces, human empathy, judgment, creativity and problem solving. However, trust and value go hand-in-hand, which is why we at Futurecurve research these factors together. Understanding the rational, emotional and socio-political factors of customer value is vital to building trust.

Managing trust proactively is important. It can’t be left to chance. Taking a strategic view of your value proposition can enable you to build trust equity. If you would like to know more, check out our white paper Harnessing your Customer Truth.